Fix “nvidia-smi failed to initialize NVML: Driver/Library Version Mismatch” Error

If you’re using an NVIDIA GPU and encounter the error “nvidia-smi failed to initialize NVML: driver/library version mismatch,” you’re not alone. This error has frustrated many users, especially those involved in gaming, deep learning, or other heavy computational tasks. But don’t worry: the issue can be fixed, and I’ll walk you through exactly how to resolve it.

What is the Error, and Why Does It Happen?

Before diving into solutions, let’s understand what “nvidia-smi failed to initialize nvml: driver/library version mismatch” actually means.

- nvidia-smi stands for NVIDIA System Management Interface. It’s a tool used to monitor and manage NVIDIA GPUs, providing real-time data like temperature, fan speed, and memory usage.

- NVML is the NVIDIA Management Library, an API for monitoring and managing various states of your NVIDIA GPU. nvidia-smi is built on top of NVML, which is why nvidia-smi fails when NVML cannot initialize.

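When you get this error, it means the version of the NVIDIA kernel module that is currently loaded doesn’t match the version of the user-space NVML library (libnvidia-ml.so) that nvidia-smi uses. Both come from the same driver package, so they normally match. They drift apart when the driver files on disk are replaced, or only partly updated, while an older kernel module is still loaded.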
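One way to see this mismatch on Linux is to compare the two versions directly: the loaded kernel module reports its version in /proc/driver/nvidia/version, and the NVML library is usually installed as libnvidia-ml.so.<version>. The sketch below assumes those locations (the library directories in particular differ between distributions), and its helper functions are hypothetical names, not part of any NVIDIA tool.

```shell
#!/bin/sh
# Compare the loaded NVIDIA kernel module version with the NVML library
# version on disk. The library paths below are assumptions; adjust them.

# Print the version from an "NVRM version:" line read on stdin, e.g.
# "NVRM version: NVIDIA UNIX x86_64 Kernel Module  535.104.05  Sat Aug ..."
kernel_module_version() {
    sed -n 's/^NVRM version:.* \([0-9][0-9]*\.[0-9][0-9.]*\) .*/\1/p'
}

# Print the version suffix of libnvidia-ml paths read on stdin, e.g.
# ".../libnvidia-ml.so.535.104.05" -> "535.104.05" (".so.1" is skipped).
nvml_library_version() {
    sed -n 's/.*libnvidia-ml\.so\.\([0-9][0-9]*\.[0-9][0-9.]*\)$/\1/p'
}

check_versions() {
    if [ ! -r /proc/driver/nvidia/version ]; then
        echo "NVIDIA kernel module not loaded (no /proc/driver/nvidia/version)"
        return 2
    fi
    kver=$(kernel_module_version < /proc/driver/nvidia/version)
    # Take the first versioned library found in the searched directories.
    lver=$(ls /usr/lib/x86_64-linux-gnu/libnvidia-ml.so.* /usr/lib64/libnvidia-ml.so.* 2>/dev/null |
        nvml_library_version | head -n 1)
    echo "kernel module: ${kver:-unknown}"
    echo "NVML library:  ${lver:-unknown}"
    if [ -n "$kver" ] && [ -n "$lver" ] && [ "$kver" != "$lver" ]; then
        echo "Mismatch: the loaded module and the installed library differ"
        return 1
    fi
    return 0
}

check_versions
```

If the two numbers differ, the module in memory and the library on disk come from different driver releases, which is exactly the condition nvidia-smi complains about.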

Common causes of this error include:

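- Installing or upgrading drivers incorrectly, such as upgrading the driver packages without rebooting, which leaves the old kernel module loaded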

- Partial installations of driver updates

- Conflicts between different versions of the CUDA toolkit and drivers
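Partial installations often leave several copies of the NVML library behind. As a sketch, the script below lists the distinct libnvidia-ml versions found under a few common library directories (an assumption; add any others your distribution uses). 32-bit and 64-bit copies of the same version are counted once, so what matters is seeing two different version numbers.

```shell
#!/bin/sh
# List each distinct libnvidia-ml version under some common library
# directories (an assumption; add others your distribution uses).

# Read library paths on stdin; print each distinct version once.
distinct_nvml_versions() {
    sed -n 's/.*libnvidia-ml\.so\.\([0-9][0-9]*\.[0-9][0-9.]*\)$/\1/p' | sort -u
}

versions=$(find /usr/lib /usr/lib64 /usr/local/lib -name 'libnvidia-ml.so.*' 2>/dev/null |
    distinct_nvml_versions)
# grep -c . counts non-empty lines, so an empty result gives 0.
count=$(printf '%s\n' "$versions" | grep -c .)

if [ "$count" -gt 1 ]; then
    echo "Found $count NVML library versions (likely a partial install):"
    printf '%s\n' "$versions"
elif [ "$count" -eq 1 ]; then
    echo "Single NVML library version: $versions"
else
    echo "No versioned libnvidia-ml found in the searched directories"
fi
```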

Solutions to Fix the Error

1. Check Your NVIDIA Driver Version

The first step in resolving the issue is making sure your NVIDIA drivers are properly installed and up to date. Run the following command to check your driver version:

nvidia-smi

If this command throws the “nvidia-smi failed to initialize nvml: driver/library version mismatch” error, then it’s almost certain you have a driver issue. Here’s what to do next.
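To script this check, the sketch below runs nvidia-smi with its --query-gpu=driver_version --format=csv,noheader options and reports either the driver version or the mismatch. The helper name classify_smi_output is hypothetical, and matching on the text “version mismatch” assumes the error wording quoted in this guide.

```shell
#!/bin/sh
# Run nvidia-smi and report either the driver version or the mismatch
# error. Assumes nvidia-smi is on PATH.

# Classify nvidia-smi output read on stdin.
classify_smi_output() {
    out=$(cat)
    if printf '%s\n' "$out" | grep -qi 'version mismatch'; then
        echo "mismatch"
    elif printf '%s\n' "$out" | grep -q '^[0-9][0-9]*\.[0-9]'; then
        # One line per GPU; all GPUs share one driver, so show the first.
        echo "ok $(printf '%s\n' "$out" | head -n 1)"
    else
        echo "unknown"
    fi
}

if command -v nvidia-smi >/dev/null 2>&1; then
    # Capture stderr too, since that is where error messages may appear.
    nvidia-smi --query-gpu=driver_version --format=csv,noheader 2>&1 | classify_smi_output
else
    echo "nvidia-smi not found"
fi
```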

2. Reinstall or Update Your NVIDIA Drivers

Sometimes, simply reinstalling or updating your drivers can fix the problem. You can download the latest drivers from the NVIDIA website.

For Linux users:

- Uninstall the current drivers by running:

sudo apt-get remove --purge '^nvidia-.*'

- Then, reinstall the latest drivers:

sudo apt-get install nvidia-driver-<version-number>

For Windows users:

- Go to Device Manager, find your NVIDIA GPU under Display Adapters, right-click, and choose Uninstall. Be sure to download and install the latest driver from the NVIDIA site afterward.

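Make sure the new driver supports the version of the CUDA toolkit on your system. The NVML library is installed as part of the driver package, so a clean reinstall should also bring it back in line with the driver.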
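On Ubuntu, where drivers are packaged as nvidia-driver-<branch> (for example nvidia-driver-535), a package left over from another branch is one way a partial installation survives a reinstall. The sketch below asks dpkg-query for fully installed (status “ii”) driver packages and warns when more than one branch is present. It assumes that Ubuntu naming scheme, the helper name is hypothetical, and other distributions need a different package query.

```shell
#!/bin/sh
# Debian/Ubuntu only: list fully installed nvidia-driver-<branch> packages.

# Read "<status> <package>" lines on stdin and print the branch part of
# each installed ("ii") nvidia-driver package, e.g. "535" or "535-server".
installed_driver_branches() {
    awk '$1 == "ii" && $2 ~ /^nvidia-driver-[0-9]/ {
        sub(/^nvidia-driver-/, "", $2)
        print $2
    }'
}

if command -v dpkg-query >/dev/null 2>&1; then
    # ${db:Status-Abbrev} prints e.g. "ii " for an installed package.
    branches=$(dpkg-query -W -f='${db:Status-Abbrev} ${Package}\n' 'nvidia-driver-*' 2>/dev/null |
        installed_driver_branches)
    count=$(printf '%s\n' "$branches" | grep -c .)
    if [ "$count" -eq 0 ]; then
        echo "No installed nvidia-driver-<branch> package found"
    else
        echo "Installed driver branches:" $branches
        if [ "$count" -gt 1 ]; then
            echo "More than one branch is installed; purge the ones you do not use"
        fi
    fi
else
    echo "dpkg-query not found; this check is for Debian/Ubuntu systems"
fi
```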

3. Verify CUDA Toolkit and NVML Compatibility

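Sometimes, this error shows up alongside an incompatible CUDA toolkit installation, for example when the toolkit installer also installed its own bundled driver over the one you were running. If you’re using CUDA for deep learning or GPU computing tasks, make sure your driver is new enough for your toolkit version: each CUDA release requires a minimum driver version.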

You can verify this by checking the CUDA version installed on your system using:

nvcc --version

Then compare that release with the minimum driver version NVIDIA lists for it in the compatibility table published in the CUDA Toolkit release notes.

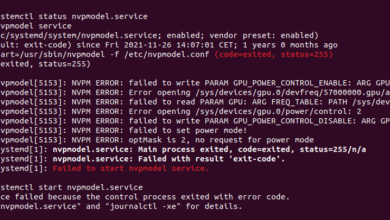
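The nvidia-smi header also shows the highest CUDA version the installed driver supports (the “CUDA Version” field), which allows a quick local comparison with nvcc. The sketch below uses a simplified rule, assumed here for illustration, that the toolkit release should not be newer than that value. CUDA’s minor version compatibility allows some exceptions, so treat a warning as a prompt to check NVIDIA’s compatibility table rather than a final verdict. It also assumes nvcc is on your PATH (it often lives in /usr/local/cuda/bin), and the helper names are hypothetical.

```shell
#!/bin/sh
# Compare the CUDA release reported by nvcc with the highest CUDA version
# the driver reports in the nvidia-smi header. Simplified rule (assumed):
# the toolkit release should not be newer than the driver's CUDA version.

# "Cuda compilation tools, release 12.1, V12.1.105" -> "12.1"
nvcc_release() {
    sed -n 's/.*release \([0-9][0-9]*\.[0-9][0-9]*\).*/\1/p'
}

# "... Driver Version: 535.104.05   CUDA Version: 12.2   |" -> "12.2"
smi_cuda_version() {
    sed -n 's/.*CUDA Version: *\([0-9][0-9]*\.[0-9][0-9]*\).*/\1/p'
}

# Succeed when dotted version $1 <= $2, comparing numeric fields in order.
version_le() {
    awk -v a="$1" -v b="$2" 'BEGIN {
        na = split(a, x, "."); nb = split(b, y, ".")
        n = (na > nb) ? na : nb
        for (i = 1; i <= n; i++) {
            xi = (i <= na) ? x[i] + 0 : 0
            yi = (i <= nb) ? y[i] + 0 : 0
            if (xi < yi) exit 0
            if (xi > yi) exit 1
        }
        exit 0
    }'
}

if command -v nvcc >/dev/null 2>&1 && command -v nvidia-smi >/dev/null 2>&1; then
    toolkit=$(nvcc --version | nvcc_release)
    driver_cuda=$(nvidia-smi 2>/dev/null | smi_cuda_version)
    if [ -z "$toolkit" ] || [ -z "$driver_cuda" ]; then
        echo "Could not read both versions (nvidia-smi may be failing)"
    elif version_le "$toolkit" "$driver_cuda"; then
        echo "OK: toolkit $toolkit, driver supports up to CUDA $driver_cuda"
    else
        echo "Toolkit $toolkit is newer than the driver's CUDA $driver_cuda"
    fi
else
    echo "nvcc or nvidia-smi not on PATH"
fi
```

The comparison is numeric per field, so 12.10 counts as newer than 12.2, which a plain string comparison would get wrong.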

4. Restart the NVIDIA Persistence Daemon

In some cases, simply restarting the NVIDIA Persistence Daemon (nvidia-persistenced) can solve the issue. This service keeps the NVIDIA driver initialized even when no application is using the GPU, and it can occasionally get into a bad state.
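Restarting the daemon takes a single systemctl command. If you also want to confirm straight away whether NVML initializes again, a small wrapper like the sketch below can help. It relies on nvidia-smi exiting with a non-zero status when NVML fails to initialize. The DRY_RUN variable and the run helper are conventions of this sketch, not NVIDIA features: by default it only prints the commands, and running it with DRY_RUN=0 executes them.

```shell
#!/bin/sh
# Restart nvidia-persistenced, then re-run nvidia-smi to see whether NVML
# initializes. DRY_RUN (a convention of this sketch) defaults to 1, which
# only prints the commands; run with DRY_RUN=0 to execute them.
DRY_RUN=${DRY_RUN:-1}

# Print the command in dry-run mode, otherwise execute it.
run() {
    if [ "$DRY_RUN" = 1 ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

restart_persistenced() {
    run sudo systemctl restart nvidia-persistenced || return 1
    # nvidia-smi exits with a non-zero status when NVML cannot initialize.
    if run nvidia-smi; then
        [ "$DRY_RUN" = 1 ] || echo "NVML initialized after the restart"
    else
        echo "nvidia-smi still fails; check the driver and kernel versions"
        return 1
    fi
}

restart_persistenced
```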

For Linux, run the following commands to restart it:

sudo systemctl restart nvidia-persistenced

5. Ensure Kernel Compatibility

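For Linux users, a kernel update can break NVIDIA driver compatibility, especially after major system updates. The NVIDIA kernel module is built for a specific kernel and must be rebuilt for each new one (often automatically, through DKMS); if that rebuild fails and the kernel and the NVIDIA drivers get out of sync, you might face this error. Consider rolling back the kernel or reinstalling the drivers.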

Check the kernel you are currently running with:

uname -r

Then make sure that kernel version is supported by your installed NVIDIA driver.
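On systems where the driver is managed by DKMS, the NVIDIA module is rebuilt for each new kernel, and dkms status shows whether that worked. The sketch below checks for an installed nvidia entry matching the running kernel. It assumes a DKMS-managed driver, handles the two output formats shown in its comments (the format has changed between DKMS versions), and uses a hypothetical helper name.

```shell
#!/bin/sh
# Check whether DKMS lists an installed nvidia module for the running
# kernel. "dkms status" output varies by DKMS version, for example:
#   nvidia/535.104.05, 6.2.0-32-generic, x86_64: installed
#   nvidia, 535.104.05, 5.15.0-83-generic, x86_64: installed

# Succeed if the dkms status text on stdin has an installed nvidia
# entry for kernel $1.
nvidia_built_for_kernel() {
    grep '^nvidia[,/]' | grep -F ", $1," | grep -q ': installed'
}

kernel=$(uname -r)
echo "Running kernel: $kernel"
if ! command -v dkms >/dev/null 2>&1; then
    echo "dkms not found; the driver may not be managed by DKMS"
elif dkms status 2>/dev/null | nvidia_built_for_kernel "$kernel"; then
    echo "DKMS reports an installed nvidia module for $kernel"
else
    echo "No installed nvidia DKMS module for $kernel; the driver may need reinstalling"
fi
```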

Insights from Users Facing Similar Issues

Many users from forums and discussion boards have shared their experiences with the “nvidia-smi failed to initialize nvml: driver/library version mismatch” error. Here are some additional tips from the community:

- Multiple GPU setups: Users with more than one GPU often find that certain GPUs work while others don’t. A clean reinstall of the driver, so that every GPU runs under the same driver version, has proven helpful for many.

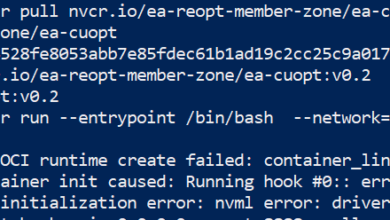

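- Docker environments: The driver itself runs on the host, and containers reach it through the NVIDIA Container Toolkit (for example, with docker run --gpus all), which mounts the host’s driver libraries, including NVML, into the container. Installing a separate driver inside the container image can reintroduce the mismatch, so make sure the container is set up correctly with the proper GPU and NVML bindings.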

- System updates: Several users have mentioned that errors appear after a major system update, especially in Linux distributions. To avoid this, it’s often recommended to lock your GPU drivers and kernel versions when everything is working fine.
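On Debian or Ubuntu, one way to lock versions is apt-mark hold, which stops apt from upgrading the held packages (apt-mark showhold lists what is currently held). The sketch below collects installed packages whose names start with nvidia-, libnvidia- or linux-image- and holds them. Those name patterns are assumptions that may catch more or fewer packages than you want, so review the printed list first. It defaults to a dry run (DRY_RUN is a convention of this sketch), and sudo apt-mark unhold with the same package names reverses the hold.

```shell
#!/bin/sh
# Debian/Ubuntu: hold the installed NVIDIA and kernel image packages so
# routine upgrades leave them alone. The name patterns are assumptions;
# review the list before applying. DRY_RUN (a convention of this sketch)
# defaults to 1; run with DRY_RUN=0 to actually apply the hold.

# Read "<status> <package>" lines on stdin and print installed ("ii")
# packages named nvidia-*, libnvidia-* or linux-image-*.
packages_to_hold() {
    awk '$1 == "ii" && ($2 ~ /^(lib)?nvidia-/ || $2 ~ /^linux-image-/) { print $2 }'
}

if command -v dpkg-query >/dev/null 2>&1; then
    pkgs=$(dpkg-query -W -f='${db:Status-Abbrev} ${binary:Package}\n' 2>/dev/null |
        packages_to_hold)
else
    pkgs=
fi

if [ -z "$pkgs" ]; then
    echo "No matching installed packages found"
elif [ "${DRY_RUN:-1}" = 1 ]; then
    echo "Would run: sudo apt-mark hold" $pkgs
else
    # $pkgs is left unquoted on purpose: one argument per package name.
    sudo apt-mark hold $pkgs
fi
```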

Conclusion

The “nvidia-smi failed to initialize nvml: driver/library version mismatch” error is a common issue for NVIDIA GPU users, but luckily, it’s fixable. By updating or reinstalling your NVIDIA drivers, verifying CUDA compatibility, restarting persistence daemons, and ensuring kernel compatibility, you should be able to resolve the problem.

If the problem persists, double-check that there aren’t other software conflicts on your system, such as older versions of CUDA or kernel incompatibility. Hopefully, this guide helps you get your GPU up and running smoothly again!