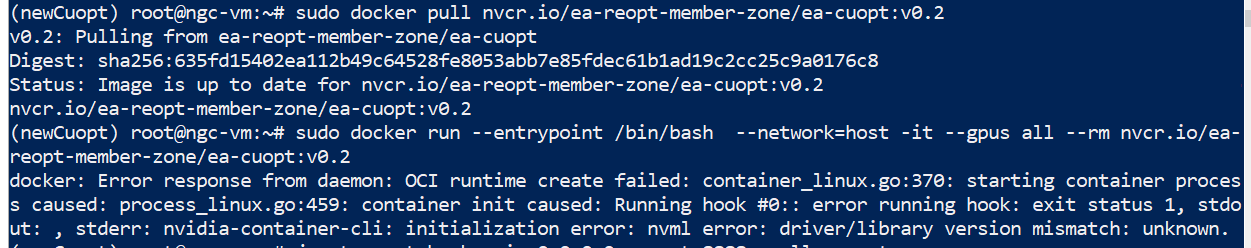

How to Fix “nvidia-container-cli: initialization error: nvml error: driver/library version mismatch: unknown”

If you’ve worked with NVIDIA GPUs on Linux-based systems, particularly in containerized environments like Docker, there’s a good chance you’ve come across the dreaded “nvidia-container-cli: initialization error: nvml error: driver/library version mismatch: unknown” error. It looks daunting at first glance, especially when it interrupts a project, but once we break it down, it becomes much more manageable.

Let’s take a deeper dive into this error, what causes it, and how you can go about fixing it.

What is NVML and NVIDIA Container CLI?

To understand the root of this error, we need to know what NVML (NVIDIA Management Library) and the NVIDIA Container CLI (Command Line Interface) are. NVML is a library provided by NVIDIA that allows monitoring and management of various states of the GPU. It’s essential for interacting with the GPU, whether you’re monitoring temperature, memory, or performance stats.

The NVIDIA Container CLI, on the other hand, is a command-line tool used in container environments like Docker to manage GPU access. It ensures that containers can utilize GPU resources, which is crucial for workloads involving machine learning, AI, or high-performance computing (HPC). To do that, it initializes NVML on the host when a container starts. If the NVML library and the NVIDIA kernel driver it talks to are at different versions, that initialization fails and you get the error we’re talking about.

Why Does This Error Happen?

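The “nvidia-container-cli: initialization error: nvml error: driver/library version mismatch: unknown” error typically happens when the NVIDIA components involved are at different versions: the kernel driver loaded on the host, the user-space driver libraries such as NVML, and any driver libraries the container brings along. NVML only initializes when the library and the loaded kernel module come from the same driver release.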

Here are the most common causes:

- Driver Version Mismatch: This is by far the most common reason. The NVML library in use comes from a different driver release than the kernel module loaded on the host. This often happens right after a driver upgrade: the new libraries are on disk, but the old kernel module stays loaded until you reboot. It can also happen when a container image carries its own older or newer copies of the driver libraries.

- Incorrect NVIDIA Docker Setup: The setup of nvidia-docker (or more recently, NVIDIA Container Toolkit) might not be configured properly. This can happen if the toolkit isn’t updated or if there’s some configuration inconsistency between the container and the host.

- CUDA Version Conflict: Sometimes, different CUDA versions can cause issues. Each CUDA release requires a minimum NVIDIA driver version. If the version of CUDA in your container needs a newer driver than the one on your system, it can lead to startup failures like this one.

- Multiple Drivers on the Host: If you’ve accidentally installed multiple versions of the NVIDIA driver on your host machine (or upgraded/downgraded drivers without properly cleaning up), you might run into this problem.
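A quick way to tell which of these cases you’re in is to compare the version of the kernel module that is currently loaded with the version of the NVML library installed on the host. The sketch below is only an illustration: it assumes the module exposes its version at /sys/module/nvidia/version and that the versioned libnvidia-ml.so file lives somewhere under /usr/lib or /usr/lib64. The function names are made up for this example.

```shell
#!/usr/bin/env bash
# Sketch: compare the loaded NVIDIA kernel module with the installed NVML library.
# Paths and function names are assumptions for illustration; adjust for your distro.

# Version of the kernel module currently loaded (prints nothing if it is not loaded).
loaded_module_version() {
  cat /sys/module/nvidia/version 2>/dev/null
}

# Extract the driver version from a library file name,
# e.g. /usr/lib/x86_64-linux-gnu/libnvidia-ml.so.535.154.05 -> 535.154.05
library_version_from_path() {
  basename "$1" | sed -nE 's/^libnvidia-ml\.so\.([0-9]+\.[0-9.]+)$/\1/p'
}

# Print "match" only when both versions are known and identical.
compare_versions() {
  if [ -n "$1" ] && [ "$1" = "$2" ]; then echo "match"; else echo "mismatch"; fi
}

module_ver="$(loaded_module_version)"
# Only the first versioned library found is checked; several may exist on a messy host.
lib_path="$(find /usr/lib /usr/lib64 -name 'libnvidia-ml.so.*.*' 2>/dev/null | head -n 1)"
lib_ver="$(library_version_from_path "$lib_path")"

echo "kernel module: ${module_ver:-not loaded}"
echo "NVML library:  ${lib_ver:-not found}"
echo "result:        $(compare_versions "$module_ver" "$lib_ver")"
```

If the two numbers differ, the host itself is out of sync, and a reboot (or a clean driver reinstall) is usually the first thing to try before touching the container.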

How to Fix It?

Fortunately, there are several ways to address the “nvidia-container-cli: initialization error: nvml error: driver/library version mismatch: unknown” error. Let’s go over them step by step.

- Ensure Driver Compatibility:

- First and foremost, check the NVIDIA driver version installed on your host machine using the command:

nvidia-smi

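This will output the driver version and the current state of your GPU. Make sure this driver version is compatible with the version of CUDA and NVML libraries your container is using. If nvidia-smi itself fails with “Failed to initialize NVML: Driver/library version mismatch”, the mismatch is on the host, and no change to the container will fix it.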
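If you only want the version number, for example to paste into a bug report or compare against the minimum driver listed for your CUDA release, nvidia-smi can print it on its own. This is a small sketch that assumes nvidia-smi is on the PATH; driver_major is a hypothetical helper, not part of any NVIDIA tool.

```shell
#!/usr/bin/env bash
# Sketch: print just the driver version, or explain why it could not be read.

# Major version of a driver string, e.g. 535.154.05 -> 535 (hypothetical helper).
driver_major() {
  echo "${1%%.*}"
}

if command -v nvidia-smi >/dev/null 2>&1; then
  # --query-gpu and --format are standard nvidia-smi options; one line is printed per GPU.
  if output="$(nvidia-smi --query-gpu=driver_version --format=csv,noheader 2>&1)"; then
    version="$(printf '%s\n' "$output" | head -n 1)"
    echo "driver version: $version (major $(driver_major "$version"))"
  else
    # On an affected host nvidia-smi usually fails as well; show what it said.
    echo "nvidia-smi failed: $output"
  fi
else
  echo "nvidia-smi not found on this machine"
fi
```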

- Check the Container’s Libraries:

- If the host driver is fine, check the CUDA and NVIDIA libraries inside the container. You can enter the container and run:

ldconfig -p | grep libcuda

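Ensure that the libraries match your host driver version. The driver version is part of the real file name behind each entry (libcuda.so.1 is usually a symlink to libcuda.so.<driver version>), so that is the number to compare.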
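Because ldconfig lists the short library names rather than the versioned files, it helps to follow each entry to the file it points at. The following is a rough sketch to run inside the container; path_from_ldconfig_line is a hypothetical helper, and it assumes ldconfig and readlink are available in the image.

```shell
#!/usr/bin/env bash
# Sketch: list the NVIDIA libraries the dynamic linker knows about and show
# the real file behind each one, whose name carries the driver version.

# Pull the path out of one line of `ldconfig -p` output, e.g.
#   "libcuda.so.1 (libc6,x86-64) => /usr/lib/x86_64-linux-gnu/libcuda.so.1"
path_from_ldconfig_line() {
  printf '%s\n' "$1" | sed -n 's/.* => //p'
}

ldconfig -p 2>/dev/null | grep -E 'libcuda\.so|libnvidia-ml\.so' | while IFS= read -r line; do
  path="$(path_from_ldconfig_line "$line")"
  # readlink -f follows symlinks to the versioned file, e.g. libcuda.so.535.154.05
  echo "$path -> $(readlink -f "$path")"
done
```

If nothing is printed, the linker cache has no NVIDIA libraries in it, which usually means the container was started without GPU support.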

- Update NVIDIA Container Toolkit:

- If you’re running nvidia-docker, it’s a good idea to ensure that you have the latest version of the NVIDIA Container Toolkit. You can update it by running:

sudo apt-get update && sudo apt-get install -y nvidia-container-toolkit && sudo systemctl restart docker

This installs the latest toolkit available from your configured package repositories, and the restart makes Docker pick it up.
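After updating, you can check that the toolkit is present and able to talk to the driver. The sketch below assumes nvidia-container-cli is installed with the toolkit; run_if_present is a hypothetical wrapper so the script still says something useful on a machine where the tool is missing.

```shell
#!/usr/bin/env bash
# Sketch: confirm the toolkit CLI is installed and can query the driver.

# Run a command if its binary exists; otherwise say it is missing (hypothetical helper).
run_if_present() {
  if command -v "$1" >/dev/null 2>&1; then
    "$@" || echo "$1 exited with an error (status $?)"
  else
    echo "$1: not installed"
  fi
}

# Show the installed CLI version.
run_if_present nvidia-container-cli --version

# Ask the CLI to query the driver; with a version mismatch this call is
# expected to fail with the same initialization error.
run_if_present nvidia-container-cli info
```

Once both commands succeed, an end-to-end check such as docker run --rm --gpus all ubuntu nvidia-smi should print the usual GPU table from inside a container.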

- Rebuild Your Docker Image:

- Sometimes, the issue can stem from an outdated Docker image that still references old libraries. You can try rebuilding your image with the latest versions of CUDA and NVIDIA libraries.

- Restart and Reinstall Drivers:

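- If all else fails, reinstalling the NVIDIA drivers on your host machine might help. Be sure to remove any previous installations properly, and quote the package pattern so the shell doesn’t expand it against local file names:

sudo apt-get purge 'nvidia-*' && sudo apt-get install nvidia-driver-<version>

Reboot afterwards so the newly installed kernel module is the one that gets loaded.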
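After a reinstall, it’s worth checking that the module in memory is the one you just installed, since a stale module is exactly what triggers the mismatch. This sketch assumes modinfo is available and that the module is named nvidia; reload_status is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: decide whether the installed driver is the one actually running.

# Compare the running module version with the one on disk (hypothetical helper).
reload_status() {
  local loaded="$1" on_disk="$2"
  if [ -z "$loaded" ]; then
    echo "module not loaded"
  elif [ "$loaded" = "$on_disk" ]; then
    echo "up to date"
  else
    echo "reboot needed"
  fi
}

loaded="$(cat /sys/module/nvidia/version 2>/dev/null)"
# modinfo -F prints a single field of the module file installed for the running kernel.
on_disk="$(modinfo -F version nvidia 2>/dev/null)"

echo "loaded: ${loaded:-none}, on disk: ${on_disk:-none}"
reload_status "$loaded" "$on_disk"
```

A “reboot needed” result means the packages were updated but the old module is still running, so restarting the machine is the simplest fix.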

- Use Driver Containers:

- One handy workaround is using NVIDIA driver containers. These containers abstract the driver management, ensuring that your container always runs the correct version. You can pull a driver container from the NVIDIA NGC (NVIDIA GPU Cloud) repository and run it alongside your main container.

Final Thoughts

Dealing with the “nvidia-container-cli: initialization error: nvml error: driver/library version mismatch: unknown” error can be frustrating, especially if you’re in the middle of a time-sensitive project. But the key is understanding that it’s usually a mismatch between the NVIDIA kernel driver loaded on your host and the driver libraries being used, whether those sit on the host or inside your container. By ensuring that these versions are in sync and keeping your NVIDIA Container Toolkit up to date, you can often resolve the issue fairly quickly.

In a world where GPUs are critical for workloads like AI and machine learning, these sorts of problems can pop up often, but with a little patience and the right approach, you’ll be back to harnessing the power of your GPU in no time!

Pro Tip

If you’re working on a shared machine or cloud instance, always check the versioning details before starting your project. It can save you a lot of troubleshooting time!