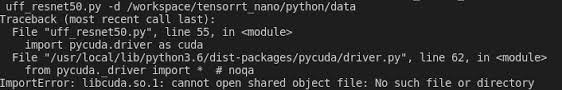

Solving the “ImportError: libcuda.so.1: cannot open shared object file: No such file or directory” Issue

If you’ve run into the error “ImportError: libcuda.so.1: cannot open shared object file: No such file or directory”, you’re almost certainly working with CUDA, NVIDIA’s parallel computing platform. You try to run a machine learning or other GPU-accelerated program, the import fails, and now you’re stuck.

Let’s break down what’s happening and how to resolve it, step by step.

What Does the Error Mean?

The “ImportError: libcuda.so.1: cannot open shared object file: No such file or directory” error tells you that the dynamic loader can’t find libcuda.so.1, the CUDA driver library. This library ships with the NVIDIA driver rather than with the CUDA toolkit, and it is what lets your program communicate with your GPU. When it’s missing or not on the loader’s search path, the import fails before your program can use the GPU at all.
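A quick way to confirm the diagnosis is to ask the dynamic loader whether it knows about the library. The snippet below is a minimal sketch, assuming a glibc-based Linux system where `ldconfig -p` is available; `lib_in_cache` is a hypothetical helper name introduced here for illustration.

```shell
# Hypothetical helper: succeed if a library name appears in the dynamic
# loader cache. An optional second argument supplies the listing text
# (useful for testing); by default it comes from `ldconfig -p`.
lib_in_cache() {
  name=$1
  if [ "$#" -ge 2 ]; then
    listing=$2
  else
    # ldconfig often lives in /sbin, which may not be on a user's PATH
    listing=$(PATH="$PATH:/sbin:/usr/sbin" ldconfig -p 2>/dev/null) || listing=""
  fi
  printf '%s\n' "$listing" | grep -qF -- "$name"
}

if lib_in_cache libcuda.so.1; then
  echo "libcuda.so.1 is known to the loader"
else
  echo "libcuda.so.1 is NOT in the loader cache"
fi
```

If the library is not in the cache, it may still exist on disk in a directory the loader does not search, which is the case the later steps address.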

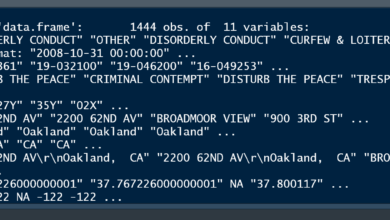

This error is particularly common when you’re:

- Installing or using TensorFlow or PyTorch with GPU acceleration.

- Working with Docker containers that don’t have proper GPU configurations.

- Using a virtual environment where CUDA or NVIDIA drivers aren’t correctly set up.

Possible Causes of the Error

There are a few reasons you might encounter this error, and each has a different solution:

- Missing or Incorrectly Installed NVIDIA Drivers

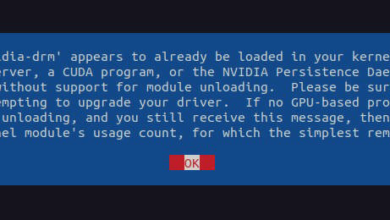

If your drivers aren’t installed correctly or you don’t have them at all, you’ll face this issue. Without NVIDIA drivers, your system can’t interact with the CUDA libraries, leading to the “ImportError: libcuda.so.1: cannot open shared object file: No such file or directory” error.

- CUDA Not Installed or Configured Properly

Even if your NVIDIA drivers are fine, the CUDA toolkit itself might not be installed properly. This can happen if you’re using an out-of-date version or simply forgot to install it.

- Broken Symbolic Links

If you’re working with Docker or other isolated environments, you might run into symbolic link problems. This happens when your system expects to find libcuda.so.1, but the link to it is broken or incorrect.

- Improper Docker or Virtual Environment Setup

If you’re running your program inside a Docker container or virtual environment, the CUDA libraries may not be mounted or accessible, causing this error.

How to Fix the Error

Here’s a detailed breakdown of the solutions, depending on what caused the error.

1. Check NVIDIA Driver Installation

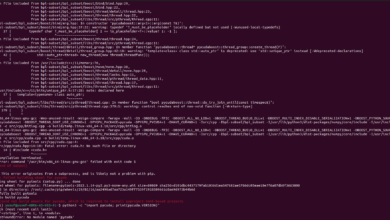

The first step is to ensure you have NVIDIA drivers installed. Open a terminal and run:

    nvidia-smi

If this command works and gives you information about your GPU, your NVIDIA drivers are installed properly. If not, you’ll need to install or update them. You can do this by visiting the NVIDIA website and downloading the appropriate drivers for your GPU.
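The same check can be scripted so that it separates "nvidia-smi is not installed" from "nvidia-smi is installed but cannot reach the driver". This is a minimal sketch; `check_driver` and its return codes are hypothetical choices made for this example, while the `--query-gpu` and `--format` options are standard nvidia-smi flags.

```shell
# Hypothetical helper: report whether the NVIDIA driver is usable.
# Returns 0 on success, 1 if nvidia-smi is missing, 2 if it fails to run.
check_driver() {
  if ! command -v nvidia-smi >/dev/null 2>&1; then
    echo "nvidia-smi not found: the NVIDIA driver is probably not installed" >&2
    return 1
  fi
  # Print one line per GPU: name and driver version
  if ! nvidia-smi --query-gpu=name,driver_version --format=csv,noheader; then
    echo "nvidia-smi is installed but cannot communicate with the driver" >&2
    return 2
  fi
}

check_driver || echo "Driver check failed: install or update the NVIDIA driver first"
```

A return code of 2 often shows up after a kernel or driver update that has not yet been followed by a reboot, though other causes are possible.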

2. Verify CUDA Installation

After checking your NVIDIA drivers, the next step is to verify your CUDA installation. Run the following command:

    nvcc --version

If the CUDA toolkit is installed and nvcc is on your PATH, this will display the version number. If not, you need to install CUDA.

To install CUDA, use the package manager for your OS. For instance, on Ubuntu, you’d run:

    sudo apt-get install nvidia-cuda-toolkit

Make sure the installed CUDA version is compatible with your NVIDIA drivers.
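To compare versions, it helps to pull just the release number out of the nvcc output. The sketch below assumes the usual "release X.Y," wording in the `nvcc --version` banner; `cuda_release` is a hypothetical helper name.

```shell
# Hypothetical helper: read `nvcc --version` output on stdin and print
# only the toolkit release number (for example 12.2).
cuda_release() {
  sed -n 's/.*release \([0-9][0-9.]*\),.*/\1/p'
}

if command -v nvcc >/dev/null 2>&1; then
  echo "CUDA toolkit release: $(nvcc --version | cuda_release)"
else
  echo "nvcc not found: the toolkit is missing or its bin directory is not on PATH"
fi
```

You can then compare this number with the "CUDA Version" shown in the header of the nvidia-smi output, which is the highest CUDA version the installed driver supports.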

3. Set Up the LD_LIBRARY_PATH

Sometimes, even after installing CUDA, the system can’t locate the libraries. You can fix this by setting the LD_LIBRARY_PATH to point to the right CUDA directory.

In your .bashrc or .zshrc, add the following line:

    export LD_LIBRARY_PATH=/usr/local/cuda/lib64:$LD_LIBRARY_PATH

Then, run:

    source ~/.bashrc

(Use source ~/.zshrc instead if you edited .zshrc.) This will ensure the CUDA library path is set every time you start a new session.
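One caveat with a plain export: if LD_LIBRARY_PATH starts out empty, the result ends in a colon, and the loader treats that empty entry as the current directory. Re-sourcing the file also adds the same directory again. The following sketch avoids both; `prepend_lib_path` is a hypothetical helper, and /usr/local/cuda/lib64 is simply the path used above, so substitute the directory that actually contains your libraries.

```shell
# Hypothetical helper: prepend a directory to LD_LIBRARY_PATH only if it
# is not already listed, and without leaving a stray empty entry.
prepend_lib_path() {
  dir=$1
  case ":${LD_LIBRARY_PATH:-}:" in
    *":$dir:"*) ;;  # already present, nothing to do
    *) LD_LIBRARY_PATH="$dir${LD_LIBRARY_PATH:+:$LD_LIBRARY_PATH}" ;;
  esac
  export LD_LIBRARY_PATH
}

prepend_lib_path /usr/local/cuda/lib64
echo "LD_LIBRARY_PATH=$LD_LIBRARY_PATH"
```

Note that libcuda.so.1 itself is usually installed by the driver into a system library directory such as /usr/lib/x86_64-linux-gnu, so it is worth checking where the file actually lives before choosing the directory to add.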

4. Use nvidia-docker for Containers

If you’re using Docker, the container needs GPU support from the NVIDIA Container Toolkit (the successor to the older nvidia-docker wrapper), which lets you use your GPU inside containers.

First, install the toolkit:

    sudo apt-get install nvidia-container-toolkit

Then, when running a container, pass the --gpus flag:

    docker run --gpus all your_image_name

This ensures that the container has access to the driver libraries, including libcuda.so.1, and your GPU.
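Before debugging your own image, it can help to run a short smoke test that only checks whether a container can see the GPU. This is a sketch under assumptions: `gpu_smoke_test` is a hypothetical helper, and it relies on the container toolkit making nvidia-smi available inside the container when `--gpus all` is passed.

```shell
# Hypothetical helper: start a throwaway container with GPU access and
# run nvidia-smi inside it. The image name is supplied by the caller.
gpu_smoke_test() {
  if [ "$#" -lt 1 ]; then
    echo "usage: gpu_smoke_test IMAGE" >&2
    return 2
  fi
  if ! command -v docker >/dev/null 2>&1; then
    echo "docker not found on PATH" >&2
    return 127
  fi
  # --rm removes the container afterwards; --gpus all exposes every GPU
  docker run --rm --gpus all "$1" nvidia-smi
}

# Usage (not run automatically, since it may pull an image):
#   gpu_smoke_test your_image_name
```

If the smoke test prints the familiar GPU table, the Docker side is configured and any remaining libcuda.so.1 error is more likely to come from the image or the application itself.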

Common Challenges & User Feedback

Many users on Stack Overflow and other developer forums have shared their frustrations and solutions to the “ImportError: libcuda.so.1: cannot open shared object file: No such file or directory” error.

- Docker Users often report issues where their containers don’t have access to the GPU because they didn’t use nvidia-docker. The solution here is to ensure you use the proper runtime flags and have the nvidia-container-toolkit installed.

- Virtual Environment Issues arise when users install CUDA in the base system but the libraries aren’t visible from inside their virtual environment. A quick fix is to make CUDA accessible inside the environment, for example by setting LD_LIBRARY_PATH or manually linking the libraries.

- Symbolic Link Problems are another headache, particularly for people working with isolated environments. Sometimes, libcuda.so.1 points to the wrong file or directory. Fixing the broken symbolic link using the ln command can save a lot of frustration:

      sudo ln -sf /usr/lib/x86_64-linux-gnu/libcuda.so.418.56 /usr/lib/x86_64-linux-gnu/libcuda.so.1

This creates a link to the correct library. The 418.56 suffix is the driver version in this example, so replace it with the version actually installed on your system; the -f flag replaces an existing broken link instead of failing.
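Because the versioned file name changes with every driver release, hard-coding it is fragile. The sketch below looks for the versioned file in a directory and points libcuda.so.1 at it. `relink_libcuda` is a hypothetical helper, and the demo runs in a scratch directory so that nothing on the real system is touched; for a system directory you would need root privileges.

```shell
# Hypothetical helper: point DIR/libcuda.so.1 at the versioned driver
# library found in DIR (for example libcuda.so.418.56).
relink_libcuda() {
  dir=$1
  target=""
  # The pattern needs two dots after "so", so it skips libcuda.so.1 itself
  for f in "$dir"/libcuda.so.*.*; do
    if [ -f "$f" ]; then
      target=$f
    fi
  done
  if [ -z "$target" ]; then
    echo "no versioned libcuda.so.* file found in $dir" >&2
    return 1
  fi
  # Relative link: libcuda.so.1 -> libcuda.so.<driver version>
  ln -sfn "$(basename "$target")" "$dir/libcuda.so.1"
}

# Demo in a scratch directory with a placeholder file
demo=$(mktemp -d)
touch "$demo/libcuda.so.418.56"
relink_libcuda "$demo" && ls -l "$demo/libcuda.so.1"
rm -rf "$demo"
```

On many systems, running sudo ldconfig is enough to regenerate these links, since ldconfig creates them from each library's embedded name; the manual approach is mainly useful when that does not help.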

Final Thoughts

The “ImportError: libcuda.so.1: cannot open shared object file: No such file or directory” error might seem daunting at first, but it’s manageable once you break it down into smaller parts. Ensuring your NVIDIA drivers, CUDA, and environment configurations are in sync is key to solving the issue.

When in doubt, start with the basics: check your drivers, verify CUDA, and don’t overlook the importance of symbolic links. Happy coding, and may your GPUs always be in top shape!