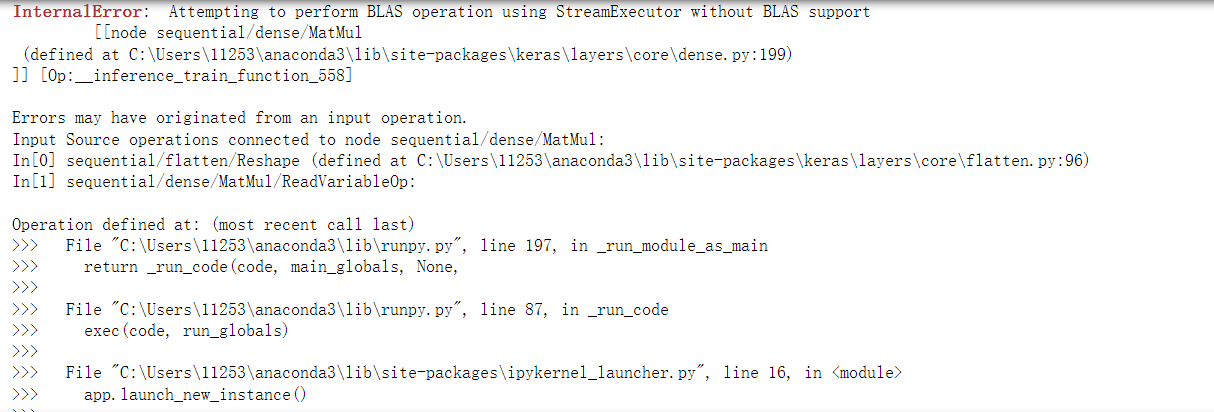

“Attempting to Perform BLAS Operations Using StreamExecutor Without BLAS Support” | Challenges and Solutions

When working with high-performance computing, BLAS (Basic Linear Algebra Subprograms) is the standard interface for low-level operations like vector and matrix multiplications, and many libraries provide highly optimized implementations of it. Good BLAS support is key to running these calculations efficiently, especially in fields like machine learning, data science, and simulation. But what happens when you’re using StreamExecutor to perform BLAS operations and BLAS support isn’t available? The situation can be tricky and frustrating, but it’s manageable.
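For reference, the central routine here is GEMM (general matrix multiply) from Level 3 BLAS. Leaving out its optional transpose arguments, it updates an m × n matrix C using an m × k matrix A, a k × n matrix B, and scalars α and β:

$$
C \leftarrow \alpha A B + \beta C,
\qquad
C_{ij} \leftarrow \alpha \sum_{p=1}^{k} A_{ip} B_{pj} + \beta C_{ij},
\quad 1 \le i \le m,\ 1 \le j \le n
$$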

What is StreamExecutor?

Before we dive into the main issue, let’s clarify what StreamExecutor is. StreamExecutor is an abstraction layer used in frameworks like TensorFlow to interface with hardware accelerators, such as GPUs or TPUs. It makes it easier to offload computations to these devices, which can run them far faster than the CPU.

Now, here’s the catch: StreamExecutor isn’t a replacement for specialized libraries like BLAS. It manages how computations are executed across different devices, and while it defines an interface for BLAS routines, the optimized implementations come from a vendor library such as cuBLAS on NVIDIA GPUs. If that library isn’t available, or fails to initialize, StreamExecutor has nothing to dispatch the operation to. That is what the message “Attempting to perform BLAS operation using StreamExecutor without BLAS support” means: you’re left without the routines that make those operations efficient.
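To make the relationship concrete, here is a toy Python model of it. Everything in it is hypothetical: `ToyExecutor`, `BlasSupport`, `PurePythonBlas`, `as_blas` and `then_gemm` are invented for illustration and are not StreamExecutor’s real (C++) API. The executor delegates matrix multiplication to an optional BLAS backend, standing in for a vendor library such as cuBLAS, and raises an error worded like the message discussed here when no backend is present.

```python
from typing import List, Optional, Protocol

Matrix = List[List[float]]


class BlasSupport(Protocol):
    # Hypothetical interface that a vendor BLAS library would implement.
    def gemm(self, a: Matrix, b: Matrix) -> Matrix: ...


class PurePythonBlas:
    """Hypothetical stand-in backend: plain Python matrix product."""

    def gemm(self, a: Matrix, b: Matrix) -> Matrix:
        return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


class ToyExecutor:
    """Hypothetical executor that may or may not have a BLAS backend attached."""

    def __init__(self, blas: Optional[BlasSupport] = None) -> None:
        self._blas = blas

    def as_blas(self) -> Optional[BlasSupport]:
        # None models "the BLAS library was not found or failed to initialize".
        return self._blas

    def then_gemm(self, a: Matrix, b: Matrix) -> Matrix:
        blas = self.as_blas()
        if blas is None:
            # Without a backend there is nothing to dispatch to, so fail loudly.
            raise RuntimeError(
                "Attempting to perform BLAS operation using "
                "StreamExecutor without BLAS support"
            )
        return blas.gemm(a, b)


if __name__ == "__main__":
    with_backend = ToyExecutor(PurePythonBlas())
    print(with_backend.then_gemm([[1.0, 2.0]], [[3.0], [4.0]]))  # [[11.0]]
    try:
        ToyExecutor().then_gemm([[1.0]], [[1.0]])
    except RuntimeError as err:
        print(f"error: {err}")
```

The design point is that the executor only routes work; the arithmetic lives entirely in whatever backend is attached.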

The Issue: No BLAS, No Problem? Well, Not Exactly…

The absence of BLAS support has noticeable consequences when you’re trying to execute high-performance tasks. In TensorFlow, the message typically surfaces as an InternalError on an op such as MatMul, and the operation fails instead of running. Where a program does fall back to general-purpose implementations rather than the highly optimized routines BLAS libraries provide, operations like matrix multiplication (the backbone of machine learning models) still run, but slowly and with heavy resource use.
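For a sense of what a “general-purpose implementation” means, here is a minimal sketch of the GEMM computation written as three plain nested loops. The function name `naive_gemm` is hypothetical, and real fallback code in a framework would differ; the point is the contrast with an optimized library.

```python
from typing import List

Matrix = List[List[float]]


def naive_gemm(alpha: float, a: Matrix, b: Matrix, beta: float, c: Matrix) -> Matrix:
    """Return alpha * (A @ B) + beta * C using plain loops: no blocking, SIMD, or threads."""
    m, k = len(a), len(a[0])
    if len(b) != k:
        raise ValueError("inner dimensions of A and B must match")
    n = len(b[0])
    # Start from beta * C (C is assumed to be m x n).
    out = [[beta * c[i][j] for j in range(n)] for i in range(m)]
    for i in range(m):
        for j in range(n):
            acc = 0.0
            for p in range(k):  # dot product of row i of A and column j of B
                acc += a[i][p] * b[p][j]
            out[i][j] += alpha * acc
    return out


if __name__ == "__main__":
    print(naive_gemm(1.0, [[1, 2], [3, 4]], [[5, 6], [7, 8]], 0.0, [[0, 0], [0, 0]]))
```

Optimized BLAS libraries compute the same result but restructure the work: they block matrices to fit in cache, use SIMD instructions, spread the loops across threads and, on GPUs, launch kernels tuned for the specific architecture. That restructuring, not different math, is where the speed comes from.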

Let’s use an analogy here: Imagine trying to make a smoothie, but instead of using a high-powered blender, you’re just stirring the ingredients with a spoon. Sure, you’ll eventually get something resembling a smoothie, but it’s going to take longer and won’t be as smooth!

What Happens Under the Hood?

When you’re attempting to perform a BLAS operation using StreamExecutor without BLAS support, several things can happen:

- Fallback to CPU-based implementations: Without GPU BLAS support, your program might end up running matrix operations on the CPU instead. For large matrices, this is much slower than GPU- or TPU-accelerated computation.

- Increased computational load: Because the computations aren’t optimized, the system needs more time and resources to perform the same tasks. This leads to slower execution, higher energy consumption, and sustained heavy load on the machine during long runs.

- Degraded performance on large datasets: For small operations, you might not notice much difference, but when dealing with large datasets or complex models, the lack of BLAS support will severely limit your system’s performance. It’s like trying to build a skyscraper with hand tools instead of using cranes and bulldozers.
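The gap widens with size because matrix multiplication work grows with the product of all three dimensions. A quick back-of-the-envelope helper (`gemm_flops` is a hypothetical name) using the common 2·m·n·k operation estimate shows how fast that grows for square matrices:

```python
def gemm_flops(m: int, n: int, k: int) -> int:
    """Approximate floating-point operations for an (m x k) @ (k x n) product.

    Each of the m*n outputs needs k multiplications and about k additions,
    which gives the usual 2*m*n*k estimate for GEMM work.
    """
    return 2 * m * n * k


if __name__ == "__main__":
    base = gemm_flops(64, 64, 64)
    for size in (64, 512, 4096):
        flops = gemm_flops(size, size, size)
        print(f"{size:>5} x {size:<5}: {flops:>17,} FLOPs ({flops // base:,}x the 64 x 64 case)")
```

Doubling the size of a square matrix multiplies the work by eight; going from 64 × 64 to 4096 × 4096 multiplies it by 262,144 (from 524,288 to about 137 billion operations). That is why a missing BLAS backend can go unnoticed on small test inputs and then dominate run time on real models.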

User Experiences: A Mixed Bag

When you browse through forums and reviews, you’ll see mixed reactions to this issue. Some users are frustrated to discover that StreamExecutor doesn’t automatically come with BLAS support and that they have to deal with slower performance. Others are more understanding, knowing that StreamExecutor is meant to coordinate hardware acceleration, not do the heavy lifting itself.

Here are some of the key takeaways from user feedback:

- “I didn’t realize I needed BLAS until my matrix operations were taking forever.” This is a common theme among users. Many assume that StreamExecutor will just handle everything efficiently, only to find out later that they need to install BLAS libraries or look for alternatives.

- “I had to manually install BLAS support, and it was a bit of a hassle, but it was worth it for the performance boost.” Those who have been able to install BLAS support report a significant improvement in execution speed and overall performance.

- “I thought my GPU was broken because my models were running so slow. Turns out, I was just missing BLAS!” Misunderstandings like this are also quite frequent. Users who are unfamiliar with how BLAS works often assume that hardware issues are to blame when, in fact, it’s a software limitation.

Workarounds and Solutions

So, what can you do if you find yourself attempting to perform a BLAS operation using StreamExecutor without BLAS support?

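- Install a BLAS library: This is the most obvious solution. For GPU work through StreamExecutor, what matters is the vendor’s GPU BLAS library, such as cuBLAS for NVIDIA GPUs, which ships with the CUDA Toolkit and needs to match the CUDA version your framework was built against. CPU libraries like OpenBLAS or Intel MKL handle linear algebra very efficiently on the CPU, but they don’t provide GPU BLAS support.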

- Use alternative libraries: If installing BLAS isn’t an option for you, libraries like Eigen ship their own optimized implementations of linear algebra functions. Armadillo is another option, though it relies on an underlying BLAS/LAPACK library for much of its speed.

- Rely on CPU for small tasks: If you’re dealing with small datasets or relatively simple operations, it might not be worth the hassle to install BLAS. In these cases, relying on CPU-based operations is a viable (albeit slower) option.

- Consider upgrading your hardware: If you’re consistently running into performance issues, it might be worth moving to hardware better suited to high-performance computing. GPUs and TPUs backed by mature, well-supported vendor linear algebra libraries (for example, NVIDIA GPUs with cuBLAS) can make a world of difference.
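Before reinstalling anything, it can help to check what your system can actually see. The sketch below is a rough diagnostic under stated assumptions: it uses the standard library’s `ctypes.util.find_library` to probe a few common BLAS library names (the name list is an assumption and will miss versioned or non-standard installs, such as Windows DLLs with version suffixes), and, only if TensorFlow is installed, reports `tf.test.is_built_with_cuda()` and the GPUs returned by `tf.config.list_physical_devices`. The helper function names are hypothetical, and a library being found does not guarantee that StreamExecutor can load and initialize it.

```python
import ctypes.util
from typing import Dict, List, Optional, Sequence

# Common base names for BLAS libraries. Actual file names vary by platform,
# distribution, and CUDA version, so treat this list as an assumption.
BLAS_CANDIDATES = ("cublas", "openblas", "mkl_rt", "blas")


def find_blas_libraries(names: Sequence[str] = BLAS_CANDIDATES) -> Dict[str, Optional[str]]:
    """Map each candidate name to the library file ctypes can locate, or None."""
    return {name: ctypes.util.find_library(name) for name in names}


def tensorflow_gpu_summary() -> Optional[Dict[str, object]]:
    """Return TensorFlow's CUDA build flag and visible GPUs, or None if TF is absent."""
    try:
        import tensorflow as tf  # optional dependency
    except ImportError:
        return None
    gpus: List[str] = [dev.name for dev in tf.config.list_physical_devices("GPU")]
    return {"built_with_cuda": tf.test.is_built_with_cuda(), "gpus": gpus}


if __name__ == "__main__":
    for name, path in find_blas_libraries().items():
        print(f"{name:<10} -> {path or 'not found'}")
    summary = tensorflow_gpu_summary()
    print("TensorFlow:", "not installed" if summary is None else summary)
```

If TensorFlow reports that it was built with CUDA but lists no GPUs, or no cuBLAS library is found, the problem is more likely the software setup than the hardware.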

Conclusion

In summary, attempting to perform a BLAS operation using StreamExecutor without BLAS support is a challenge, but it’s not insurmountable. While you may run into failed operations, slower performance, and increased computational load, there are solutions available, ranging from installing optimized libraries to upgrading your hardware. The key is understanding how BLAS and StreamExecutor work together and ensuring that you have the right setup for your specific needs.